AI News

Apple released an unusual coding language model

Apple has quietly released a new AI coding model called DiffuCoder-7B-cpGRPO on Hugging Face. What makes it interesting is that it generates code in a different way than usual, while performing on par with other top open-source coding models.

Apple AI Model

Here’s the thing: most AI models that write code do it the same way people read, left to right and top to bottom. That’s because these systems are autoregressive. They take your prompt, predict the first token (roughly, a word or a piece of a word) of the answer, then read your prompt plus that first token to predict the second one, and keep repeating this until the answer is complete.
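To make the left-to-right loop concrete, here is a minimal Python sketch. The `next_token_scores` function and its tiny bigram table are hypothetical stand-ins for a real model: an actual LLM scores candidates using the whole prefix, not just the last token. The loop itself is the part that matters: predict one token, append it, and feed the longer sequence back in.

```python
# Hypothetical toy "model": maps the last token to scores for possible next tokens.
# A real LLM conditions on the entire prefix; this table is only a stand-in.
TOY_SCORES = {
    "<prompt>": {"def": 2.0, "print": 0.5},
    "def": {"add(a,": 3.0, "main():": 1.0},
    "add(a,": {"b):": 3.0},
    "b):": {"return": 2.5},
    "return": {"a+b": 3.0},
    "a+b": {"<eos>": 4.0},
}


def next_token_scores(prefix: list[str]) -> dict[str, float]:
    """Return a score for every candidate next token, given the sequence so far."""
    return TOY_SCORES.get(prefix[-1], {"<eos>": 1.0})


def generate_left_to_right(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Autoregressive decoding: pick one token, append it, re-read everything, repeat."""
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(sequence)  # model sees the prompt plus all generated tokens
        token = max(scores, key=scores.get)   # greedy choice: the highest-scoring token
        if token == "<eos>":                  # end-of-sequence marker stops generation
            break
        sequence.append(token)                # new tokens can only ever go at the end
    return sequence[len(prompt):]


print(" ".join(generate_left_to_right(["<prompt>"])))
```

Each step only appends at the end of the sequence, so nothing already generated can be revisited. That fixed order is exactly the constraint Apple's model relaxes.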

But Apple’s new model can actually write code out of order, which is pretty innovative and could make coding faster and more flexible.

LLMs also have a setting called “temperature” that controls how random the output is. When predicting the next token, the model assigns a probability to every possible option, and the temperature reshapes those probabilities before one option is picked.

A lower temperature means that the most likely token is more likely to be chosen, while a higher temperature gives the model more freedom to choose less likely tokens.
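A common way to apply temperature is to divide the model's raw scores (logits) by it before turning them into probabilities with a softmax. The sketch below assumes that standard formulation; the three candidate tokens and their scores are made up for illustration, and the two temperatures are the 0.2 and 1.2 values the DiffuCoder paper compares.

```python
import math
import random


def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Divide each score by the temperature, then normalize into probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: s / temperature for tok, s in logits.items()}
    peak = max(scaled.values())  # subtract the max before exp() for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores for three candidate next tokens.
logits = {"return": 2.0, "yield": 1.0, "pass": 0.5}
for t in (0.2, 1.2):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in probs.items()})
```

At 0.2 almost all of the probability lands on the top-scoring token, while at 1.2 the lower-scoring tokens get a noticeably larger share, which is what "more freedom" means in practice.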

Another approach is the diffusion model, which is commonly used for image generation. In short, the model starts with a blurry, noisy image and removes the noise step by step, guided by the user’s prompt, until the result matches what the user asked for.

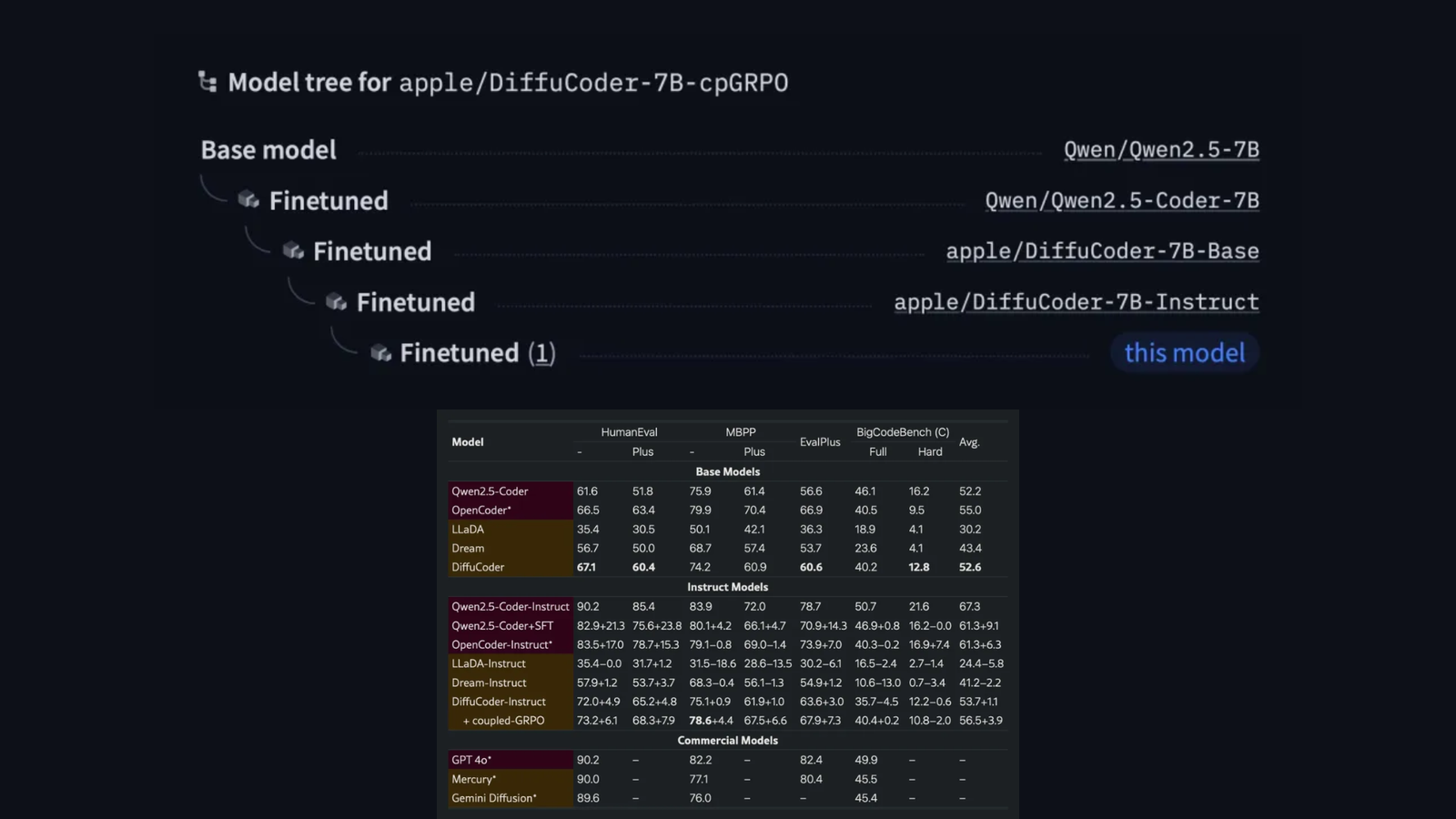

The model released by Apple is called DiffuCoder-7B-cpGRPO, and it is based on a paper published last month titled “DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation.”

The paper describes a code generation model that takes a diffusion-first approach, with one special feature: when the sampling temperature is raised from the default 0.2 to 1.2, DiffuCoder becomes more flexible about the order in which it generates tokens, freeing it from the strict left-to-right constraint.
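For text, a masked diffusion model starts from a sequence where every position is masked (the text equivalent of pure noise) and fills positions in over several steps, in whatever order it chooses. The Python sketch below is a simplified illustration, not DiffuCoder's actual sampler: the `denoise` function, its fixed proposals, and its scores are hypothetical. The scores deliberately fall from left to right to mimic a model that has picked up a left-to-right bias, so at temperature 0 it unmasks strictly in order, while a higher temperature makes out-of-order choices more likely.

```python
import math
import random

MASK = "<mask>"

# Hypothetical toy denoiser. A real masked diffusion model recomputes its
# proposals from the partially unmasked sequence at every step; here the
# proposed tokens and confidence scores are fixed for illustration.
TARGET = ["def", "add(a,", "b):", "return", "a+b"]
SCORES = [5.0, 4.0, 3.0, 2.0, 1.0]  # higher = more confident; favors earlier positions


def denoise(tokens: list[str]) -> dict[int, tuple[str, float]]:
    """Propose a (token, score) pair for every position that is still masked."""
    return {i: (TARGET[i], SCORES[i]) for i, t in enumerate(tokens) if t == MASK}


def diffusion_decode(length: int, temperature: float, rng: random.Random) -> tuple[list[str], list[int]]:
    """Fill a fully masked sequence one position per step, in any order."""
    tokens = [MASK] * length
    order = []  # positions in the order they were unmasked
    while MASK in tokens:
        proposals = denoise(tokens)
        positions = list(proposals)
        if temperature == 0:
            # Greedy: always commit the single most confident position.
            pos = max(positions, key=lambda p: proposals[p][1])
        else:
            # Sample a position; a higher temperature flattens these weights,
            # so less confident (here, later) positions get picked earlier more often.
            peak = max(proposals[p][1] for p in positions)
            weights = [math.exp((proposals[p][1] - peak) / temperature) for p in positions]
            pos = rng.choices(positions, weights=weights, k=1)[0]
        tokens[pos] = proposals[pos][0]
        order.append(pos)
    return tokens, order


rng = random.Random(0)
for t in (0, 0.2, 1.2):
    tokens, order = diffusion_decode(len(TARGET), t, rng)
    print(f"temperature={t}: unmask order {order}")
```

Unlike the left-to-right loop, any masked position can be filled at any step, so the generation order becomes a choice the sampler makes rather than a fixed rule.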

Built on top of an open-source LLM by Alibaba

More interestingly, Apple’s model is built on top of Qwen2.5‑7B, an open-source foundation model from Alibaba. Alibaba first fine-tuned that model for better code generation (as Qwen2.5‑Coder‑7B), then Apple took it and made its own adjustments.

They turned it into a new model with a diffusion-based decoder, as described in the DiffuCoder paper, and then adjusted it again to better follow instructions. Once that was done, they trained yet another version of it using more than 20,000 carefully picked coding examples.

Apple DiffuCoder

On a popular coding benchmark, DiffuCoder-7B-cpGRPO keeps its ability to generate code without strictly relying on left-to-right order, and its score improves by 4.4% compared with the diffusion-based coding model it was trained from.


YouTube rolls out new AI-powered tools for Shorts creators

YouTube has officially announced new AI-powered creation tools for making Shorts, according to a recent post on the platform’s blog.

The new features include a Photo to video converter, generative effects, and access to an AI playground for experimenting with creative outputs.

Photo to video tool

The Photo to video tool allows users to transform still images from their camera roll into animated Shorts. Users can select a photo and apply creative suggestions that add motion, such as animating landscapes, objects or group pictures.

This feature is rolling out in the United States, Canada, Australia and New Zealand, with more regions expected to follow later in the year. It is free to use.

Both the Photo to video tool and the generative effects are powered by Google’s Veo 2 technology. YouTube said Veo 3 would be integrated into Shorts later this summer.

In supported regions, the feature can be accessed by tapping the create button, followed by the sparkle icon.

YouTube noted that AI-generated content will include SynthID watermarks and clear labels to indicate that it was created using artificial intelligence.

According to the blog post, the new tools are designed to make the creative process more accessible, while preserving transparency about AI use in content creation.


Google Expands Firebase Studio with AI Tools for Popular Frameworks

Google has officially released a series of updates to Firebase Studio aimed at expanding its AI development capabilities and deepening integration with popular frameworks and Firebase services.

The new features were unveiled at I/O Connect India.

At the core of the update are AI-optimised templates for Flutter, Angular, React, Next.js, and general Web projects. These templates enable developers to build applications in Firebase Studio using Gemini, Google’s AI assistant, with the workspace defaulting to an autonomous Agent mode.

“We’re unveiling new updates that help you combine the power of Gemini with these new features to go from idea to app using some of your favourite frameworks and languages,” said Vikas Anand, director of product management at Google.

Firebase Studio now supports direct prompting of Gemini to integrate backend services. Developers using App Prototyping Agent or an AI-optimised template can simply describe the desired functionality, and Gemini will recommend and incorporate relevant Firebase services, including adding libraries, modifying code, and assisting with configuration.

“You can get assistance from Gemini to help you plan and execute tasks independently without waiting for step-by-step approval,” said Jeanine Banks, vice president and general manager, Developer X at Google.


Nvidia, AMD to Resume AI Chip Sales to China in US Reversal

Nvidia reportedly plans to resume sales of its AI chips to China, a business that has become part of a global race pitting the world’s biggest economies against each other. The company’s announcement on Monday comes after Nvidia CEO Jensen Huang met with President Donald Trump at the White House last week.

AMD AI Chip Plan For China

AMD is also planning to restart sales of its AI chips to China. “We were recently informed by the Department of Commerce that license applications to export MI308 products to China will be moving forward for review,” the company said in a statement to CNN. “We plan to resume shipments as licenses are approved. We applaud the progress made by the Trump Administration in advancing trade negotiations and its commitment to US AI leadership.”

Treasury Secretary Scott Bessent told Bloomberg in an interview Tuesday that the Nvidia export controls have been a “negotiating chip” in the larger US-China trade talks, in which the two countries have made a deal to lower tariffs charged on one another.

The same day, Commerce Secretary Howard Lutnick said that the resumption of Nvidia’s AI chip sales to China was part of the trade agreement with Beijing on rare earths. “We put that in the trade deal with the magnets,” he told Reuters, referring to rare earth magnets.

“In order for America to be the world leader, just like we want the world to be built on the American dollar, using the American dollar as a global standard, we want the American tech stack to be the global standard,” Huang told CNN’s Fareed Zakaria in an interview that aired Sunday. “We love that the internet is created by American technology and is built on American technology, and so we should continue to aspire to that.”

AI News7 months ago

AI News7 months agoTurn Photos into Videos Using Google Gemini AI

AI News7 months ago

AI News7 months agoGoogle Expands Firebase Studio with AI Tools for Popular Frameworks

AI News7 months ago

AI News7 months agoApple New AI Model Can Detect Pregnancy With 92 percent

AI News7 months ago

AI News7 months agoGoogle hires Windsurf execs in $2.4 billion deal

AI Tutorial7 months ago

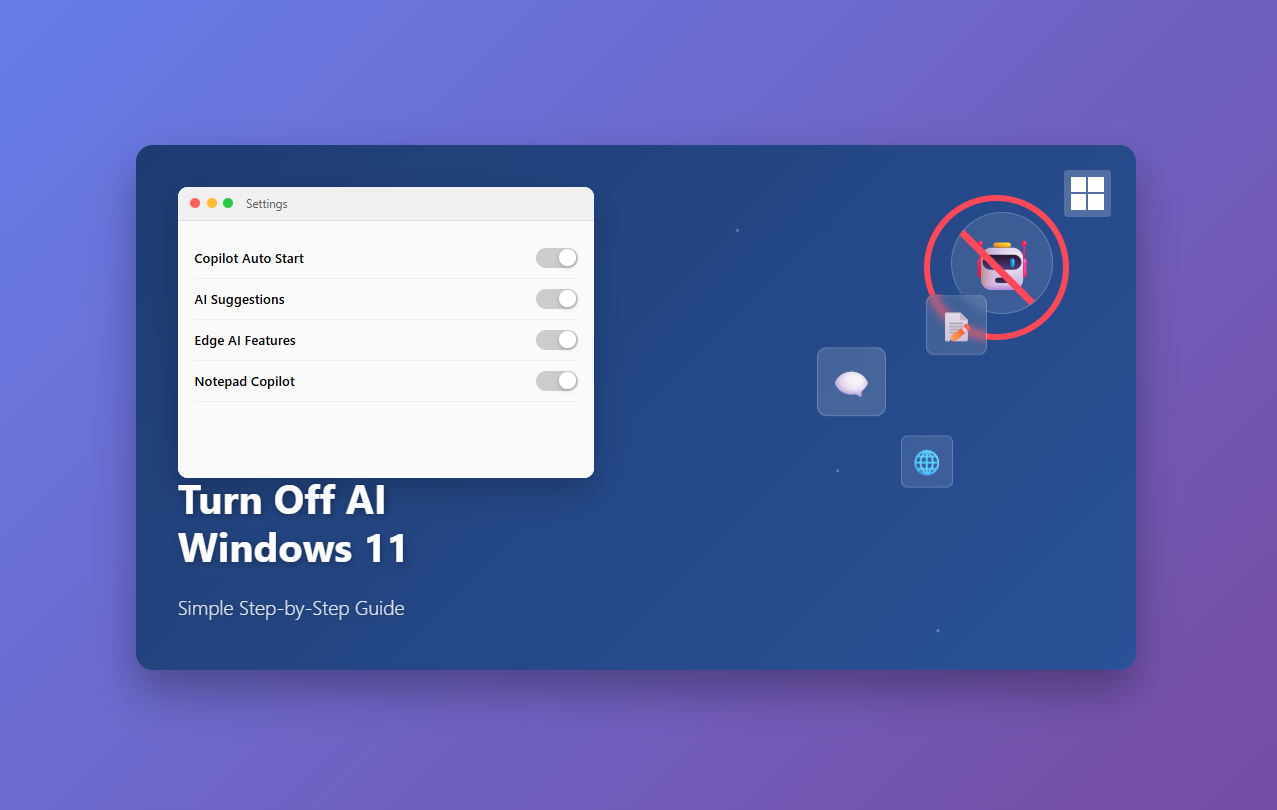

AI Tutorial7 months agoHow to Turn Off Microsoft AI Features

AI News7 months ago

AI News7 months agoOpenAI has now restored the services after outage

AI News6 months ago

AI News6 months agoYouTube rolls out new AI-powered tools for Shorts creators

AI Tools7 months ago

AI Tools7 months agoIs This Simple Note-Taking App the Future of AI?